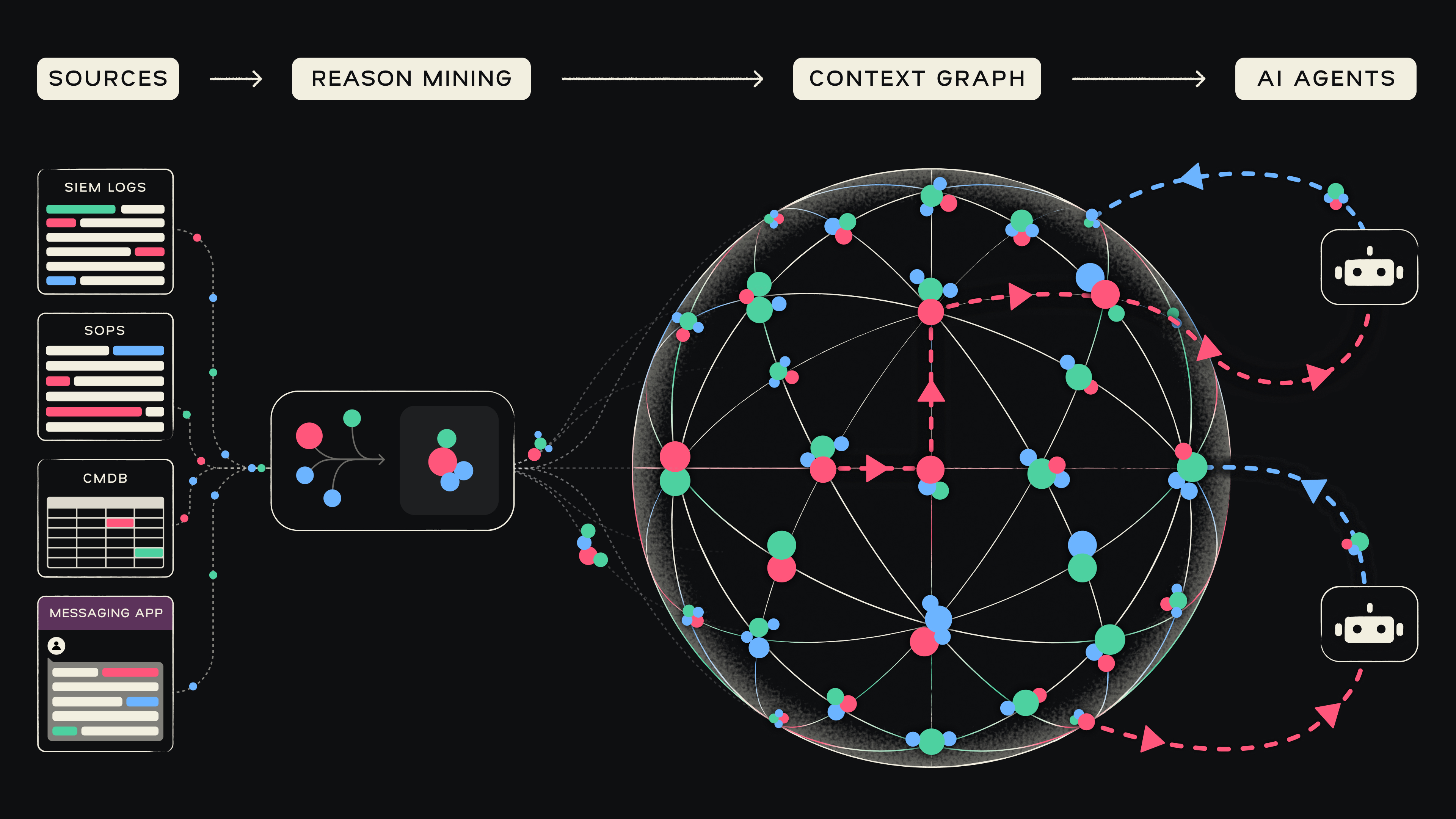

Introducing: the Security Context Graph

We are witnessing the AI SOC revolution as we speak.

AI is slashing alert queues, sharpening focus, and speeding up SOC work like never before. Overloaded Tier-1 analysts are being elevated to AI engineers, and they are happier for it!

I personally see this on every customer call I attend.

And yet, when I meet a CISO for the first time, I can feel the mistrust. They have piloted AI in their SOC and got burned: agents taking months to learn, confidently generating wrong verdicts, and requiring more “babysitting” than the SOAR they were meant to replace.

Before we started Mate, my co-founders and I worked at some of the companies that built the earlier AI SOC agents. We saw why they were failing: it wasn't the agents, it was the data.

AI agents are fed data structured for humans.

This is not surprising. Throughout history, every time a disruptive technology was introduced to scale human labor, we have had to rearrange an environment originally structured for humans so that the new technology could work. Most AI solutions are missing that step.

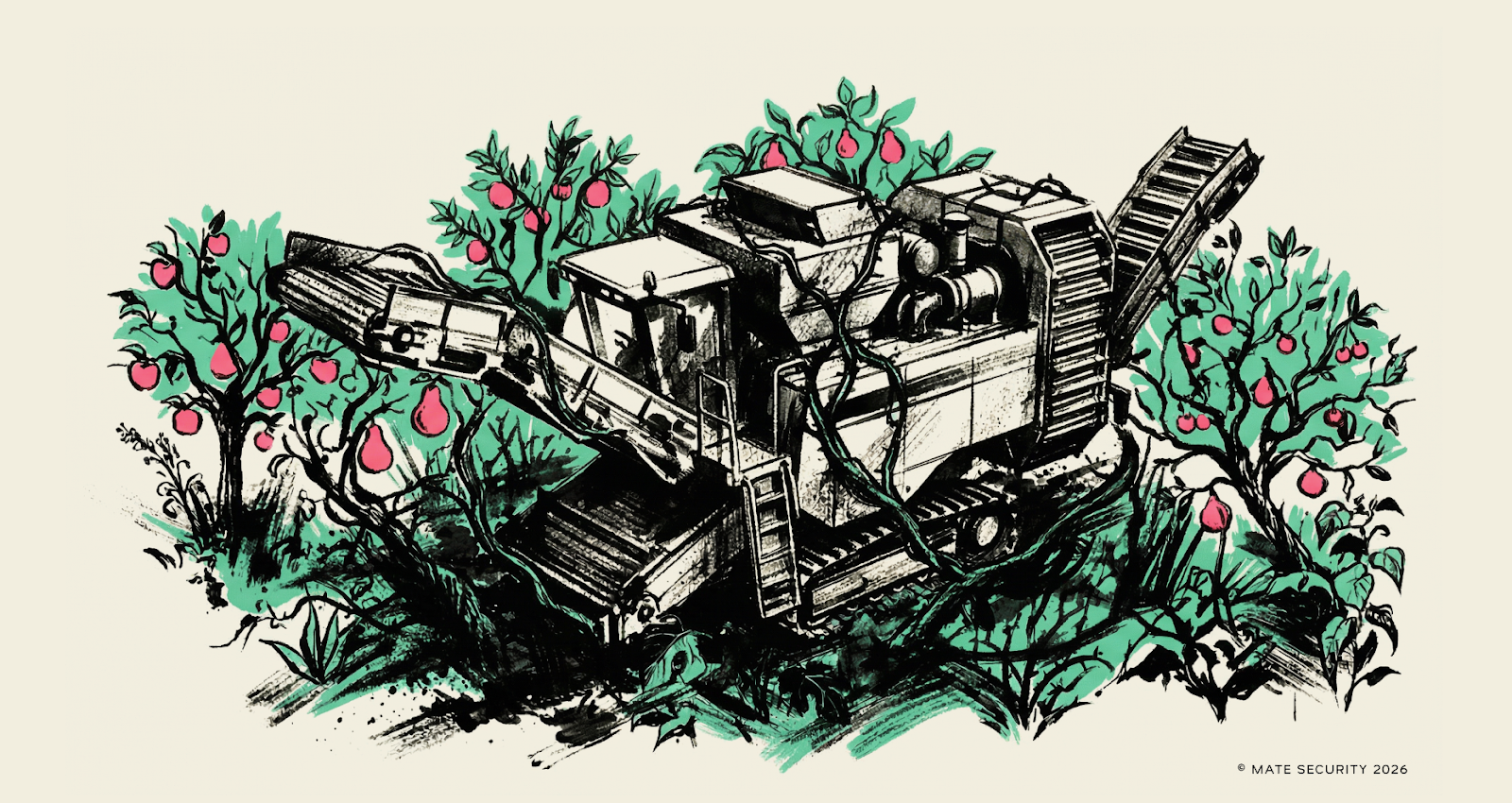

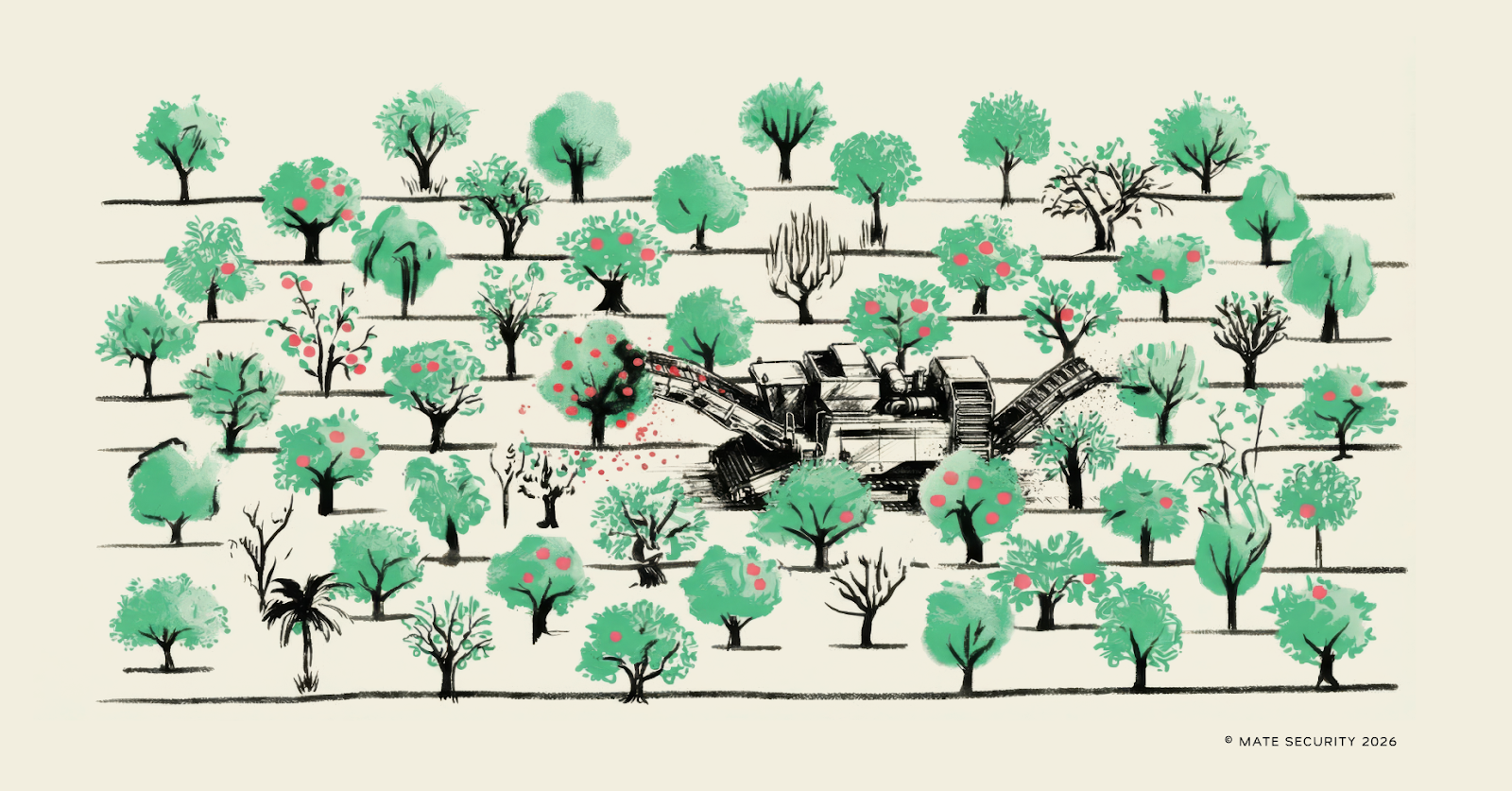

Take an example from a completely different domain: agriculture.

The harvester keeps breaking until you restructure the grove around it; only then does it scale.

And this is exactly the case with AI.

We have a massive SOC challenge, and vendors have rushed to build AI agents to solve it, but no one stopped to think about building a data structure that would make those agents work.

SOC analysts work with tables, logs, and documents, and they communicate over Slack, MS Teams, or the phone. They rely on their experience and common sense to connect the dots. But AI cannot do that. AI agents need more than tables and documents to draw conclusions. They need more than the ‘what’; they need the ‘why’: the operational context.

For example, an experienced analyst knows that emails from training@acme.com are typically simulated phishing. The analyst knows this because it was mentioned in a Slack conversation, or in a ticket closed as a “phishing exercise”. The analyst understands the context and keeps this “memory” for next time, but an AI agent does not.

This is where AI starts hallucinating.

Getting AI SOC agents to reason like experienced analysts requires a paradigm shift in the way we arrange data. We need to restructure data for AI consumption, with operational context, or “memories”, as the new building block.

This is why we have built the Security Context Graph.

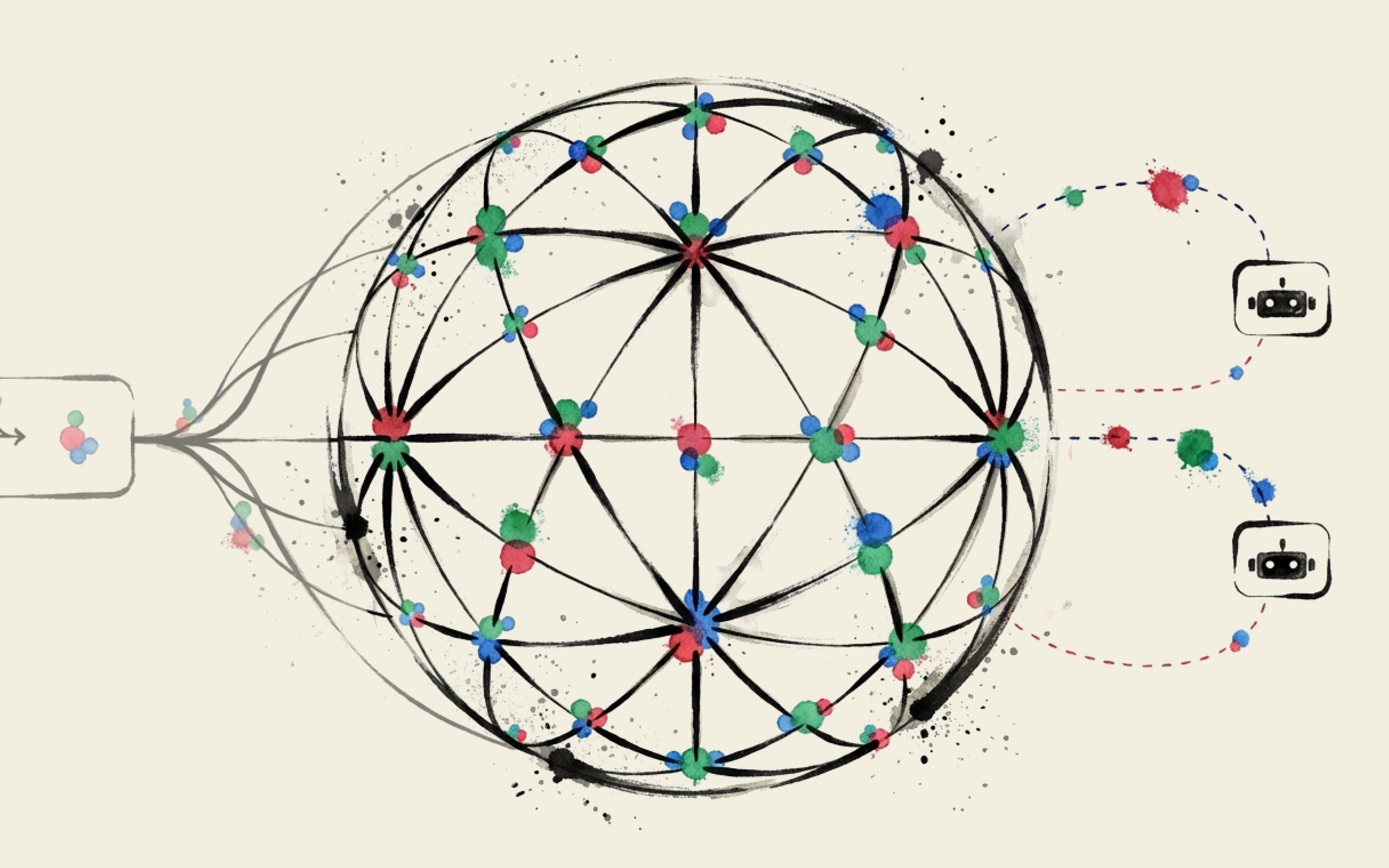

It is the underlying foundation of our agentic AI platform.

The Security Context Graph is a graph of memories that captures SOC knowledge the way an experienced analyst sees it. AI agents can traverse the Graph to fetch precise answers during complex investigations. It is a living structure that rebuilds and optimizes itself with every investigation, every ownership change, and every policy change, so decisions reflect what is relevant right now.
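
To make the idea of a “graph of memories” more concrete, here is a deliberately tiny, hypothetical sketch in Python. It is not Mate's implementation or data model: it simply assumes entity nodes (such as an email sender) that link to memory nodes recording a piece of operational context and where it came from, and an agent that answers a question by traversing one hop from the entity to its memories. The `Memory` and `ContextGraph` names are illustrative assumptions, and the data reuses the training@acme.com example from earlier in this post.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical toy model for illustration only; not Mate's actual data model.


@dataclass
class Memory:
    """One piece of operational context, e.g. learned from Slack or a closed ticket."""
    fact: str
    source: str


@dataclass
class ContextGraph:
    """Toy graph: entity nodes (senders, hosts, users) linked to memory nodes."""
    edges: dict[str, list[Memory]] = field(default_factory=lambda: defaultdict(list))

    def remember(self, entity: str, fact: str, source: str) -> None:
        # Attach a new memory node to the entity it describes.
        self.edges[entity].append(Memory(fact, source))

    def recall(self, entity: str) -> list[Memory]:
        # Traverse one hop from the entity to its memories; .get() avoids
        # creating an empty entry for entities the graph has never seen.
        return list(self.edges.get(entity, []))


graph = ContextGraph()
graph.remember(
    "training@acme.com",
    "sender of simulated phishing emails",
    source="ticket closed as 'phishing exercise'",
)

for memory in graph.recall("training@acme.com"):
    print(f"{memory.fact} (from {memory.source})")
```

Running it prints the stored memory together with its source, which is the kind of “why” an agent can cite when explaining a verdict. This sketch leaves out everything that makes the real problem hard, such as extracting memories from raw sources and updating or retiring them when policies and ownership change.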

Before we built our first agent, we spent months building the technology to transform SOC knowledge, scattered across many formats and sources, into the Security Context Graph. This data-first approach is what makes Mate fundamentally different and more accurate, and our customers are seeing the impact.

We consistently hear four messages from our customers:

- Accuracy: our agents “get it right” more often. Their reasoning improves dramatically, and they can now answer complex questions with precision.

- Consistency: because the Security Context Graph is designed to provide a single source of truth, verdicts are much more consistent, whereas most AI agents are unpredictable.

- Transparency: because data is structured from an analyst’s point of view, an agent can easily explain the reasoning behind a verdict. It also lets agents admit uncertainty when appropriate: “I ran the investigation and I am 70% sure; this is the data I need from you to be more confident.”

- Adaptability: agents stay resilient to the frequent changes in policies, ownership, and best practices typical of a SOC, because the Graph is continuously rebuilt and adjusted to the most recent organizational context.

We have built our product on the foundation of the Security Context Graph because we recognized that agents are only as effective as the data structure on which they are built.

This is the only way for AI to earn trust.
