Keep AI on the Leash

Would you take a presentation generated by GPT and show it to your board without reviewing it first? Of course not. So why do we keep talking about "autonomous SOCs" as if humans were the bottleneck rather than the quality control?

The cybersecurity vendor ecosystem has fallen into automation theater. Every SOC platform vendor promises "autonomous operations" and "lights-out SOCs." But here's the uncomfortable truth: the most effective AI agent implementations aren't the ones that eliminate human judgment; they're the ones that make human verification seamless and powerful.

The Autonomous SOC Fantasy

At every security conference, I hear the same vendor pitch: "Our AI agents completely automate threat investigation and response." But dig deeper, and you'll find that even with these "autonomous" SOC platforms, the critical decisions still require human judgment. The problem is that humans often exercise that judgment through clunky interfaces, by wading through endless AI escalations, or by discovering agent mistakes after automated responses have already executed.

The dirty secret? Even the vendors selling autonomous SOCs don't run them that way internally. They have humans reviewing AI agent decisions, humans validating escalations, humans providing context that no algorithm can capture. But they hide this behind the mythology of "autonomous operations" because that's what the market supposedly wants to hear.

Why Current Human-AI Collaboration Sucks

Most SOC platforms treat humans as glorified reviewers of AI agent decisions. The AI agent investigates an alert, decides to escalate or close it, and the human's job is to review each escalation and mark it "valid" or "false positive." Over and over.

The Classic Anti-Pattern:

- ML detection systems generate 1,000 alerts daily

- AI agents investigate each alert

- AI agents escalate 200 alerts as "threats"

- A human reviews each escalation individually

- The human finds that 180 are false positives

- The AI agent learns nothing

- Tomorrow, the same pattern repeats
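The cost of this loop is easy to put in numbers. Here is a minimal Python sketch that turns the illustrative daily figures from the list above into an escalation rate, a precision figure, and the review effort spent on false positives. The `DailyTriage` name is hypothetical, and the 30-day projection simply assumes the pattern repeats unchanged.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DailyTriage:
    """One day of the anti-pattern above (hypothetical record type)."""
    alerts: int
    escalations: int
    false_positives: int

    @property
    def escalation_rate(self) -> float:
        # fraction of all alerts the agents pushed to a human
        return self.escalations / self.alerts

    @property
    def precision(self) -> float:
        # fraction of escalations that turned out to be real threats
        return (self.escalations - self.false_positives) / self.escalations

    def wasted_reviews(self, days: int = 1) -> int:
        # human reviews spent on false positives if nothing is ever learned
        return self.false_positives * days


day = DailyTriage(alerts=1000, escalations=200, false_positives=180)
print(f"escalation rate: {day.escalation_rate:.0%}")  # 20%
print(f"precision: {day.precision:.0%}")  # 10%
print(f"false-positive reviews per 30 days: {day.wasted_reviews(30)}")  # 5400
```

A precision of 10% means nine out of ten human reviews only confirm that the agent was wrong, and because none of that judgment is captured, the same 180 wasted reviews come back every day.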

This isn't collaboration; it's digital quality-control work.

What Good Human Verification Actually Looks Like

The best human-AI collaboration systems don't ask humans to classify AI outputs; they ask humans to validate AI reasoning, while the AI brings fresh investigative perspectives that humans might miss.

Instead of: "Is this escalated alert a real threat? Yes/No"

The AI should say:

"This looks like normal admin activity, but I noticed it coincides with three other 'normal' activities across different systems that together form an unusual pattern. Could this be a coordinated insider threat?"

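That first example depends on correlating events that are each individually benign. One simple way to approximate it is a sliding time window that flags any burst of activity touching several distinct systems. This is a minimal Python sketch under stated assumptions: the `Event` record, the `correlated_windows` helper, the 30-minute window, and the four-system threshold are all hypothetical choices, not the behavior of any particular SOC platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    """A single, individually 'normal' activity (hypothetical record type)."""
    timestamp: datetime
    system: str
    actor: str
    action: str


def correlated_windows(events, window=timedelta(minutes=30), min_systems=4):
    """Return runs of events that fit inside one time window and touch at
    least `min_systems` distinct systems. Overlapping runs may all be
    reported; a production system would merge them."""
    ordered = sorted(events, key=lambda e: e.timestamp)
    groups = []
    start = 0
    for end, event in enumerate(ordered):
        # advance the left edge until the run spans no more than `window`
        while event.timestamp - ordered[start].timestamp > window:
            start += 1
        run = ordered[start:end + 1]
        if len({e.system for e in run}) >= min_systems:
            groups.append(run)
    return groups


base = datetime(2024, 1, 1, 2, 0)  # toy data starting at 02:00, off-hours
events = [
    Event(base, "vpn", "alice", "login"),
    Event(base + timedelta(minutes=5), "directory", "alice", "group lookup"),
    Event(base + timedelta(minutes=12), "fileserver", "bob", "bulk read"),
    Event(base + timedelta(minutes=20), "email", "bob", "large attachment sent"),
    Event(base + timedelta(hours=6), "vpn", "carol", "login"),
]
for run in correlated_windows(events):
    print([f"{e.system}: {e.action}" for e in run])
```

With this toy data, only the four events that fall within 20 minutes of each other are reported; the later VPN login lands outside the window. Note that the sketch groups across users on purpose, since a coordinated insider threat may involve more than one account.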
Instead of: "Review these 47 escalated alerts individually"

The AI should say:

"I've grouped these escalations by investigation pattern. The common thread is unusual file access during off-hours, but all from users with legitimate admin rights. Should we investigate if their accounts are compromised?"

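Grouping needs some working definition of "same investigation pattern." A minimal sketch, assuming (hypothetically) that the agent tags each escalation with a set of short finding labels and treating two escalations as the same pattern when their label sets match exactly. The `Escalation`, `group_by_pattern`, and `summarize` names are illustrative; a real system would likely need fuzzier similarity, but exact matching keeps the idea visible.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Escalation:
    """An escalated alert plus the finding labels the agent attached (hypothetical)."""
    alert_id: str
    user: str
    findings: frozenset


def group_by_pattern(escalations):
    """Bucket escalations whose finding sets match exactly, largest bucket first."""
    buckets = defaultdict(list)
    for esc in escalations:
        buckets[esc.findings].append(esc)
    return sorted(buckets.items(), key=lambda item: len(item[1]), reverse=True)


def summarize(groups):
    """One line per pattern, so a human makes one decision per pattern."""
    lines = []
    for findings, members in groups:
        users = ", ".join(sorted({e.user for e in members}))
        lines.append(f"{len(members)} escalation(s) share {sorted(findings)}; users: {users}")
    return lines


off_hours_admin = frozenset({"off_hours_file_access", "user_has_admin_rights"})
escalations = [
    Escalation("A-1", "dana", off_hours_admin),
    Escalation("A-2", "eli", off_hours_admin),
    Escalation("A-3", "dana", off_hours_admin),
    Escalation("A-4", "finn", frozenset({"login_from_new_country"})),
]
for line in summarize(group_by_pattern(escalations)):
    print(line)
```

The design choice is to shift the unit of human review from the alert to the pattern: three escalations collapse into one question about whether off-hours admin file access is expected.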
Instead of: "Approve/deny this automated response plan"

The AI should say:

"The malware signature is known, but the delivery method is novel. I'm seeing similar delivery patterns in threat intelligence from three other organizations this week. Should we investigate if this is part of a broader campaign?"

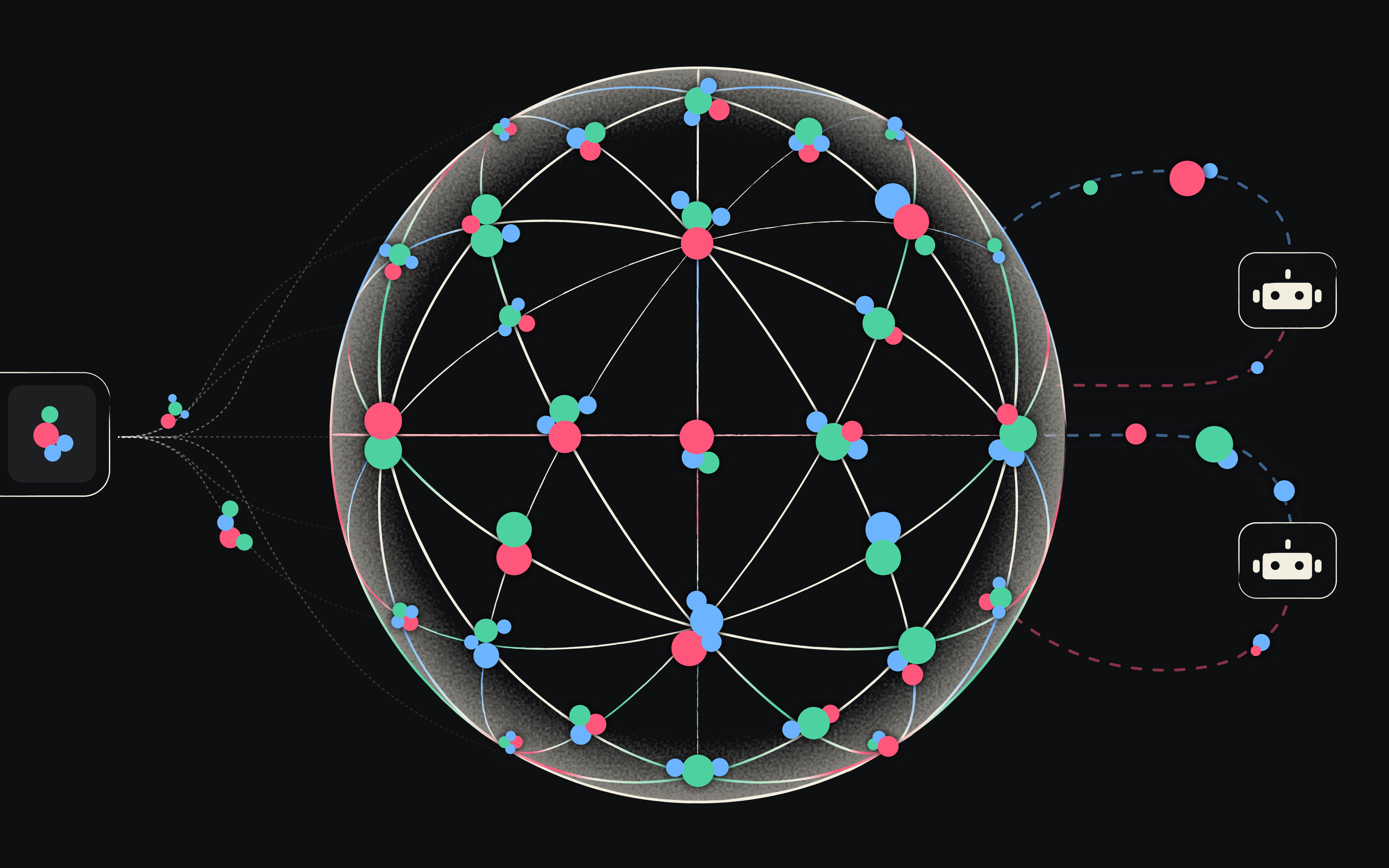

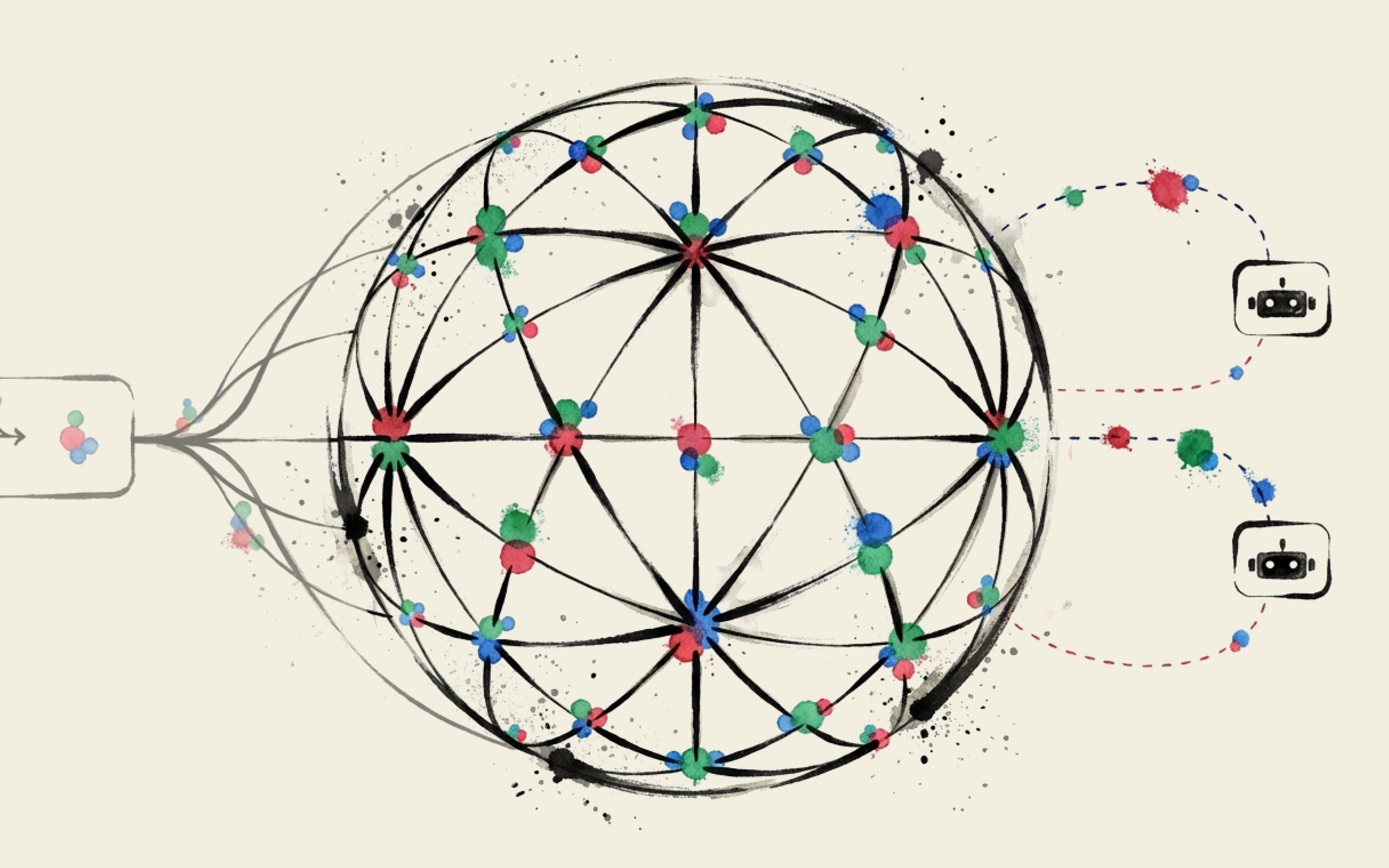

The key insight: the AI brings the computational power to spot patterns and correlations across vast datasets that humans would never notice. Humans bring business context, intuition, and judgment calls about risk tolerance. Together, they catch threats that neither would find alone.

The Leash Principle: Control Enables Power

When you design proper human verification into your AI agent systems, you can let the agents be more aggressive in their investigations, more creative in their threat hunting, and more willing to dig deeper into suspicious patterns, because you know there's a quality-control mechanism in place to prevent disasters.

Without the leash: AI agents must be extremely conservative in escalations to avoid overwhelming humans.

Result: boring, safe automation that misses sophisticated threats.

With the leash: AI agents can be bold and thorough because humans will catch the context misses and business logic errors.

Result: deeper investigations, novel threat discovery, higher-quality escalations.

Making Verification Seamless

The key is making verification feel like a natural part of the workflow, not additional work:

Pattern-Level Feedback

Instead of asking analysts to review escalations one at a time, show:

"I've investigated this user behavior pattern 47 times this month. I keep escalating it because [reasoning], but you keep marking it benign. What am I missing about the business context?"

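The pattern-level question can be driven by simple bookkeeping. A minimal sketch, assuming hypothetical thresholds (at least 20 escalations of a pattern, at least 90% of them marked benign) and a plain string label per pattern. `PatternFeedback` is an illustrative name, and the counts in the usage example are invented to mirror the 47-escalation quote above.

```python
from collections import Counter


class PatternFeedback:
    """Tracks how analysts resolve escalations for each behavior pattern (hypothetical)."""

    def __init__(self, min_cases=20, benign_ratio=0.9):
        self.min_cases = min_cases
        self.benign_ratio = benign_ratio
        self.escalated = Counter()
        self.marked_benign = Counter()

    def record(self, pattern: str, analyst_says_benign: bool) -> None:
        self.escalated[pattern] += 1
        if analyst_says_benign:
            self.marked_benign[pattern] += 1

    def questions(self):
        """Yield a context question for each pattern analysts keep dismissing."""
        for pattern, total in self.escalated.items():
            benign = self.marked_benign[pattern]
            if total >= self.min_cases and benign / total >= self.benign_ratio:
                yield (f"I've escalated '{pattern}' {total} times and you marked it "
                       f"benign {benign} times. What am I missing about the business context?")


fb = PatternFeedback()
for i in range(47):
    fb.record("finance exports after 22:00", analyst_says_benign=(i >= 2))
for _ in range(5):
    fb.record("new device login", analyst_says_benign=True)
for q in fb.questions():
    print(q)
```

Only the first pattern produces a question: it has enough history and a high dismissal rate, while the second has too few cases to justify interrupting an analyst.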
Learn from Overrides

When humans override agent decisions, capture the reasoning:

"I disagree with this escalation because this user has admin privileges and this is normal for their role" becomes a new context rule the agent applies automatically.

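One way to turn an override into a reusable rule is to store the analyst's reasoning alongside the conditions it applies to, and check those rules before escalating. This is a minimal sketch, assuming (hypothetically) that a rule is keyed on an exact role and activity match; `ContextRule`, `Agent`, and their methods are illustrative names, not any product's API.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ContextRule:
    """A rule learned from an analyst override (hypothetical structure)."""
    role: str
    activity: str
    reason: str      # the analyst's own words, kept for audit
    created_by: str


@dataclass
class Agent:
    rules: list = field(default_factory=list)

    def learn_from_override(self, role, activity, reason, analyst):
        self.rules.append(ContextRule(role, activity, reason, analyst))

    def should_escalate(self, role, activity):
        """Return (escalate?, explanation) after checking learned context rules."""
        for rule in self.rules:
            if rule.role == role and rule.activity == activity:
                return False, f"suppressed by rule from {rule.created_by}: {rule.reason}"
        return True, "no matching context rule"


agent = Agent()
print(agent.should_escalate("admin", "bulk permission change"))
agent.learn_from_override(
    "admin", "bulk permission change",
    "this user has admin privileges and this is normal for their role",
    analyst="j.doe",
)
print(agent.should_escalate("admin", "bulk permission change"))
print(agent.should_escalate("contractor", "bulk permission change"))
```

Keeping the reason and the analyst's name on every rule keeps the leash visible: each suppression can be traced back to a human decision and revoked if the context changes.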
Show Confidence and Reasoning

"I think this requires escalation because:

- User accessed sensitive files outside normal hours [confidence: 95%]

- Access pattern doesn't match historical behavior [confidence: 78%]

- Files aren't related to their role [confidence: 60%]"
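A rationale like this is easy to produce if each piece of evidence is kept as a separate claim with its own confidence. A minimal sketch, assuming the agent reports a confidence between 0 and 1 per claim; `Reason` and `explain` are hypothetical names, and pointing the analyst at the weakest claim is one possible design choice rather than a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reason:
    """One piece of evidence behind an escalation (hypothetical structure)."""
    claim: str
    confidence: float  # 0.0 to 1.0, as reported by the agent


def explain(reasons):
    """Render an escalation rationale, strongest evidence first,
    and point the analyst at the weakest claim to verify."""
    if not reasons:
        raise ValueError("an escalation needs at least one reason")
    ordered = sorted(reasons, key=lambda r: r.confidence, reverse=True)
    lines = ["I think this requires escalation because:"]
    lines += [f"- {r.claim} [confidence: {r.confidence:.0%}]" for r in ordered]
    weakest = ordered[-1]
    lines.append(f"Least certain: '{weakest.claim}'. Please check that first.")
    return "\n".join(lines)


print(explain([
    Reason("Files aren't related to their role", 0.60),
    Reason("User accessed sensitive files outside normal hours", 0.95),
    Reason("Access pattern doesn't match historical behavior", 0.78),
]))
```

Directing attention to the lowest-confidence claim means the human spends verification effort where the agent is least sure, which is usually where business context matters most.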

The Compound Effect

Here's what happens when you design human verification correctly: the AI agent gets smarter faster, because instead of just collecting escalation approvals, you're capturing human reasoning about what constitutes a real threat in your specific environment.

Over time:

- Verification becomes faster because the agent surfaces genuinely concerning patterns with the context humans need

- Humans become more effective because the agent handles routine investigation work

- Security improves because you're combining AI's computational power with human business context and judgment

The Real Competitive Advantage

While your competitors chase the autonomous SOC fantasy, you can build something more powerful: human-AI agent collaboration that actually works. Systems where humans want to work with AI agents because the agents make them more effective, not systems where humans reluctantly review endless escalations.

The goal was never to eliminate humans from security operations. The goal was to make human analysts superhuman by giving them AI agents that bring novel insights while preserving human judgment where it matters most.

Keep the AI agents on the leash, and watch both human and artificial intelligence reach new heights.