Why We Built the Security Context Graph

AI SOC agents keep failing, and everyone's blaming the models. We think they're wrong. The problem is data, and we built a new architecture to fix it. Our CEO Asaf covered the announcement - here I want to get into the why.

What’s Breaking AI SOC Agents?

Before we founded Mate, we knew that the SOC was the place for AI disruption. However, we kept hearing that the “first wave” of AI SOC agents was not trustworthy enough. These agents seemed to need significant ramp-up time, yet they still could not deliver precise, consistent verdicts or be trusted to run investigations. We decided to look into that problem.

I was part of the first Microsoft Security Copilot team, so I had a front-row seat to what works and what doesn't when you point AI at security operations. Looking at the existing agents, we quickly realized that this was not an agent problem but a data problem. Agents were failing because the data was structured for human cognition, not for machine reasoning, and it was missing information that agents need to reach the right results.

CMDB tables, Splunk logs and Confluence documents “work well” (haven’t met anyone loving those yet) for analysts, but AI agents consume information differently, and this mismatch showed up as errors, hallucinations, or brittle behavior. This is because humans can connect the dots and generate context out of facts - generate the “why” from the “what”, but AI cannot. AI needs this “why” generated for it to be accurate.

Take this example: when a case is closed with a comment that says “This domain is known-benign, it is used by the finance team”, analysts maintain in their heads:

- Why the domain is benign (a vendor, SaaS, internal app)

- Who uses it and under what conditions

- When it would become suspicious (new geos, new privileges, off-hours)

Humans will connect the dots and treat this known benign as conditional trust, not as an absolute truth. But agents will typically interpret this as a whitelist with no expiration or scope. They will trust the domain across all users, hosts, and times and will not re-validate this scenario. This will lead to false negatives in similar cases.

This decision logic, the context, lives outside the written record. It resides in the memory of experienced analysts. What makes things worse is that when these analysts leave, these memories leave with them.

When building our product we knew that we had to start by reconstructing data for AI consumption: capturing these pieces of context, these memories, in a new form that AI can use. If we are successful, this also ensures that when analysts leave, this institutional memory stays within the organization.

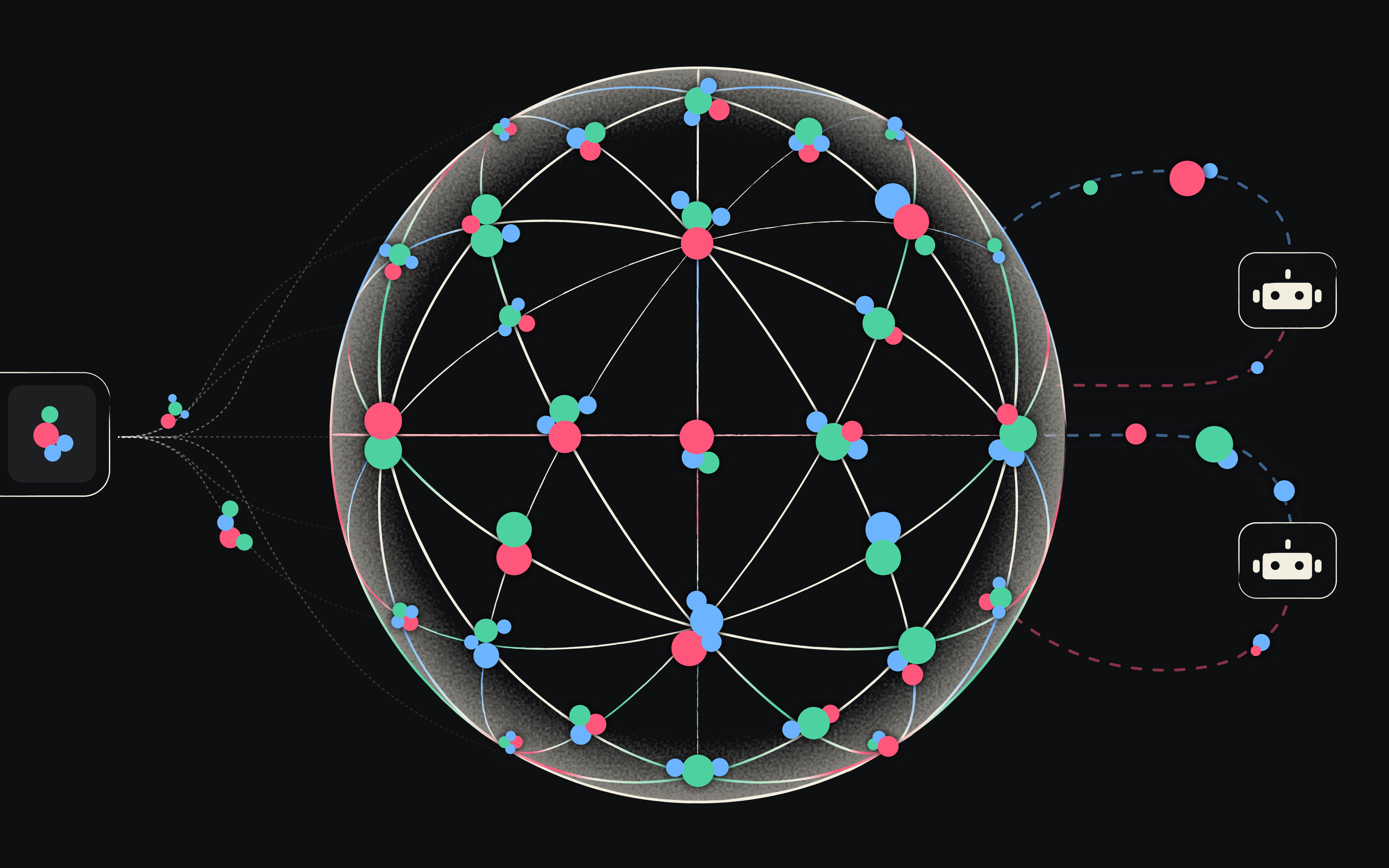

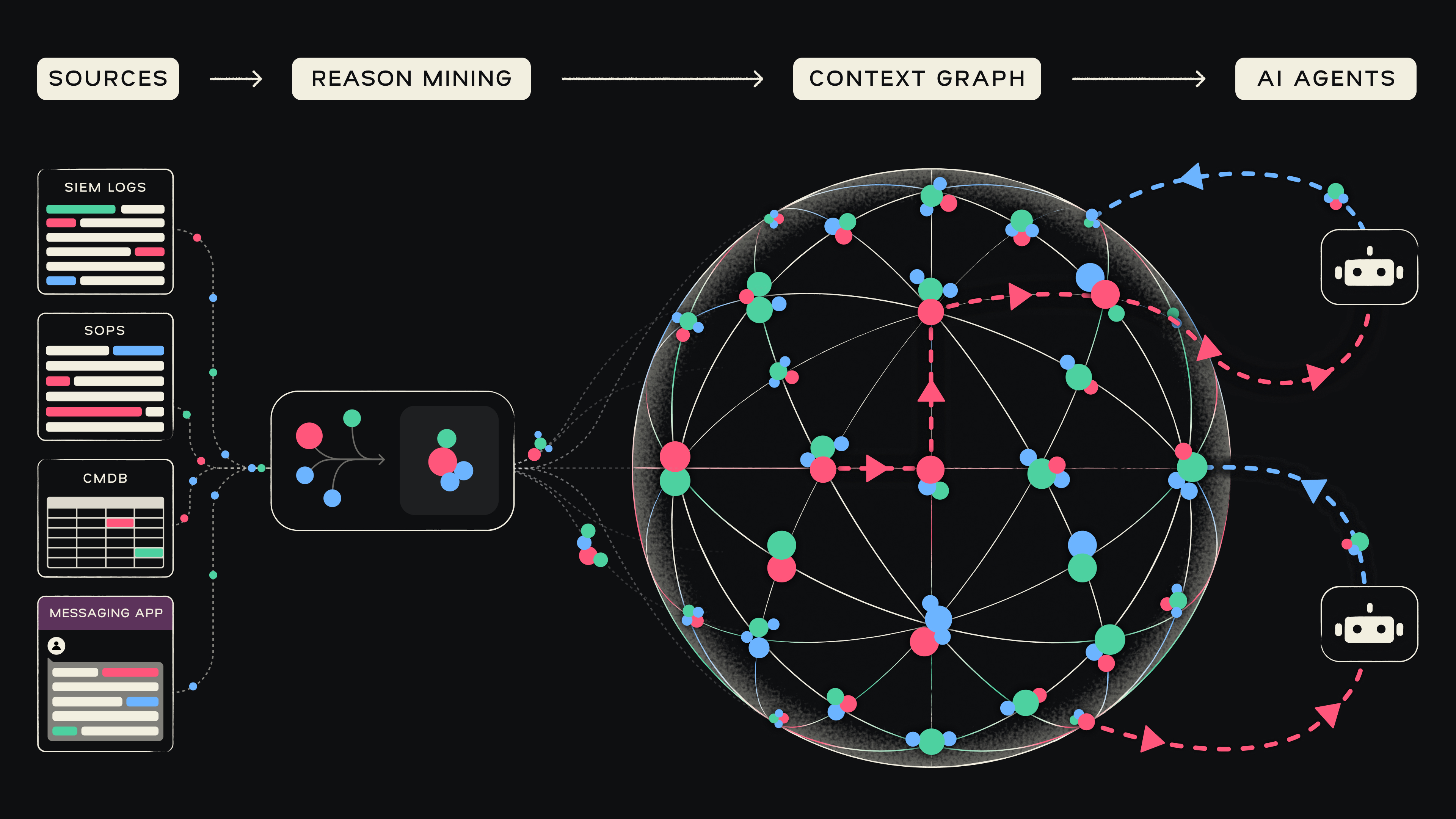

We called this new architecture the Security Context Graph. It is the underlying structure that powers Mate’s AI agents.

Context is the New Building Block

Before we even started working on our agent we spent months designing the Graph architecture and the mechanisms to convert institutional knowledge into the new format.

Our first realization was that the new building block had to be context. This is the “hop” that analysts make in their minds, and we needed to make it for AI. Once captured, it becomes an organizational asset.

If the old building block was an exception, a rule, a Splunk log entry, or a JIRA ticket, the new approach would be to look across a broad range of sources, including security tools, SOPs, policies, chats, etc.

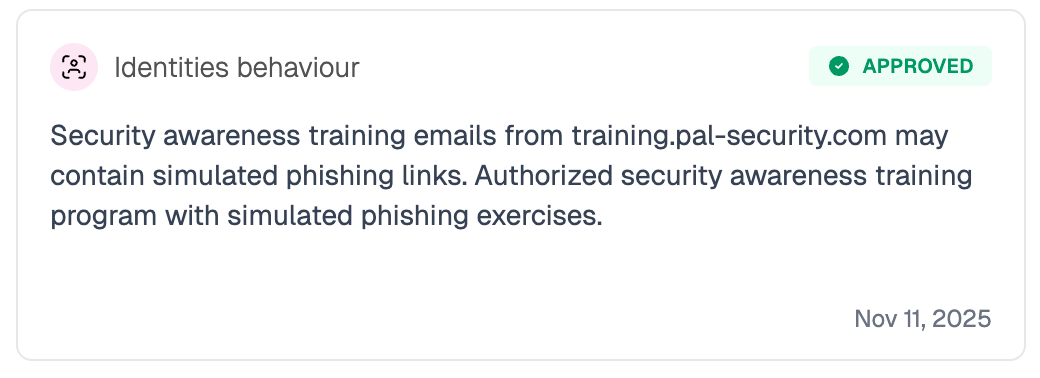

From these sources we then create a new entity, which we call a Memory. In our product it looks like this:

The Security Context Graph stores thousands of Memories, making it the equivalent of your organization's tribal knowledge: the combined memories, experience and intuition of your most experienced SOC team members.

We enhance these pieces of context with global knowledge (timely threat intel, best practices, tool documentation, etc.), just like a good SOC analyst would, but at scale.

Reason Mining: How We Capture the "Why"

Instead of simply restructuring data and logs in a graph format, we’ve created a process that we call Reason Mining. This means extracting the logic behind human actions. For example, when an analyst marks an IP as safe, the Graph creation process does not just record a rule or an analyst decision. It captures the reasoning behind it, e.g. "This IP is safe because John is traveling to Japan this week but we should not always trust it". This turns ephemeral judgment into structured, queryable memory for AI agents.

When an analyst closes a case, Mate doesn't just capture the verdict. It analyzes the investigation path, the comments, the tools queried, and the context at the time of the decision to reconstruct the reasoning chain. This is what becomes a Memory.
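To make the idea concrete, here is a minimal Python sketch of what a Memory record could hold. The `Memory` class, its field names, the `recall` helper, and the sample IP are all illustrative assumptions, not Mate's actual schema. The only point is that the reason and its conditions are stored next to the verdict and can be queried.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Memory:
    """Hypothetical record: a verdict plus the reasoning behind it."""
    subject: str                 # what the decision was about
    verdict: str                 # what the analyst decided
    reason: str                  # the mined "why"
    conditions: tuple[str, ...]  # circumstances the reason depends on
    sources: tuple[str, ...]     # where the reasoning was reconstructed from
    decided_on: date


def recall(store: list[Memory], subject: str) -> list[Memory]:
    """Return every stored memory about the given subject."""
    return [m for m in store if m.subject == subject]


store = [
    Memory(
        subject="203.0.113.7",  # documentation-range IP, made up for the example
        verdict="safe",
        reason="John is traveling to Japan this week",
        conditions=("user is John", "trip is still ongoing"),
        sources=("case comments", "investigation path", "tools queried"),
        decided_on=date(2025, 3, 3),  # arbitrary example date
    )
]

for memory in recall(store, "203.0.113.7"):
    print(memory.verdict, "because", memory.reason, "| only while:", memory.conditions)
```

A flat allow-list would keep only `subject` and `verdict`. Everything an agent needs in order to question the verdict later lives in the other fields.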

We know human judgment isn’t perfect. That’s why the Graph isn't a static recording device. The Graph does not treat past analyst decisions as absolute truths or permanent allow-lists. Instead, it treats them as historical evidence with attached confidence levels. If an analyst marked an IP as safe, the Graph records the specific circumstances of that decision (e.g., “safe because it was a managed range at that time”). Crucially, this confidence decays over time. A decision made six months ago holds significantly less weight than one made today by five different analysts on the team. The Agent weighs this decaying historical context against live data, ensuring that old assumptions never blindly override present threats.
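One simple way to picture decaying confidence is sketched below in Python. The exponential half-life, the 30-day default, the base confidence of 0.6, and the noisy-OR rule for combining several analysts are all assumptions chosen for illustration. The text above does not specify the actual decay function or how corroboration is scored.

```python
def decayed_confidence(base: float, age_days: float, half_life_days: float = 30.0) -> float:
    """Halve the confidence of a past decision every `half_life_days` (assumed model)."""
    return base * 0.5 ** (age_days / half_life_days)


def combined_confidence(confidences: list[float]) -> float:
    """Combine independent confirmations with a noisy-OR (assumed model).

    Each extra confirmation removes a share of the remaining doubt.
    """
    remaining_doubt = 1.0
    for c in confidences:
        remaining_doubt *= 1.0 - c
    return 1.0 - remaining_doubt


# One analyst, six months ago: 0.6 * 0.5**6, roughly 0.009.
old = combined_confidence([decayed_confidence(0.6, age_days=180)])

# Five analysts, today: 1 - 0.4**5, roughly 0.99.
recent = combined_confidence([decayed_confidence(0.6, age_days=0)] * 5)

print(f"six months old, one analyst: {old:.3f}")
print(f"today, five analysts:        {recent:.3f}")
```

Under these assumptions the old decision still counts as evidence, but it is far too weak to outvote live data on its own.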

Hypergraph Architecture: Overriding the Risks of Rigid Rules

Traditional approaches model security relationships as binary: trusted or not, normal or not. Real security decisions aren't binary. A login can be normal for this user, from this location, during business hours, on a managed device, but suspicious if any one of those conditions changes. Our Graph captures all of those conditions simultaneously, so the agent reasons about the full picture, not a simplified yes/no. The rigid, binary approach of “old world” data often creates new risks, which I covered extensively in another post.

Take our previous example: a rigid rule might say “John often logs in from Tokyo; ignore alerts”. But what if John is not in Tokyo today? How would an analyst validate it in this SPECIFIC organization? A good, experienced analyst will catch this. The hypergraph structure ensures that agents are sensitive to these multi-relation nuances and are not misled by such rules which may not be applicable right now. This dramatically increases precision and minimizes wrong verdicts.
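The difference between a rigid pairwise rule and a multi-way relation can be sketched in a few lines of Python. In a hypergraph, one edge can join more than two nodes. Here a hypothetical `HyperEdge` ties together the user, the login location, the travel plan, and the device. The class, the role names, and the `mismatches` helper are illustrative assumptions rather than a description of Mate's internal data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HyperEdge:
    """One relation joining any number of (role, value) nodes."""
    label: str
    members: frozenset  # of (role, value) pairs


def mismatches(edge: HyperEdge, observed: dict) -> set:
    """Roles where the observed event departs from what the edge describes."""
    return {role for role, value in edge.members if observed.get(role) != value}


normal_login = HyperEdge(
    label="John's Tokyo logins are expected",
    members=frozenset({
        ("user", "john"),
        ("login_geo", "Tokyo"),
        ("travel_plan", "Tokyo"),
        ("device", "managed"),
    }),
)

# Today's alert: the login comes from Tokyo, but no trip is on record.
today = {"user": "john", "login_geo": "Tokyo", "travel_plan": "none", "device": "managed"}

# A rigid rule looks at only two of the nodes and would suppress the alert.
rigid_rule_matches = today["user"] == "john" and today["login_geo"] == "Tokyo"

broken = mismatches(normal_login, today)
print("rigid rule says ignore:", rigid_rule_matches)
print("conditions that no longer hold:", broken)
```

Because the edge carries every condition at once, the agent can see exactly which part of the “normal” picture no longer holds instead of receiving a bare yes or no.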

Dynamic Graph Reconstruction

In a SOC, context changes every minute. Asset ownership changes constantly, SOPs are rewritten, people travel, and a risk that was accepted last year might be unacceptable today due to new regulations. A comment that an analyst made an hour ago may affect the decisions for an incident happening now. Therefore, our Graph reconstructs continuously as new context comes in, so that it reflects the context of the specific decision the agent needs to make right now. In the case of John’s suspicious login from Tokyo, the Graph would be reconstructed to reflect his most up-to-date travel plan. This doesn’t mean the history is forgotten. It now carries more up-to-date context and tells the full story: what happened at each point in time, and under which circumstances.
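The “history is kept, but the latest context wins” behavior can be illustrated with an append-only list of timestamped facts and an as-of lookup. This Python sketch is a deliberately simplified stand-in, not how the Graph is actually rebuilt. The `Fact` class, the `as_of` helper, the key `john.travel_plan`, and the dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Fact:
    key: str
    value: str
    recorded_at: datetime


def as_of(facts, key, when):
    """Latest fact for `key` that was already known at time `when`, else None."""
    known = [f for f in facts if f.key == key and f.recorded_at <= when]
    return max(known, key=lambda f: f.recorded_at, default=None)


# Facts are only ever appended, so earlier context is never overwritten.
facts = [
    Fact("john.travel_plan", "Tokyo", datetime(2025, 3, 1, 9, 0)),
    Fact("john.travel_plan", "trip cancelled", datetime(2025, 3, 10, 14, 0)),
]

at_first_alert = as_of(facts, "john.travel_plan", datetime(2025, 3, 5))
at_second_alert = as_of(facts, "john.travel_plan", datetime(2025, 3, 12))

print("context on 5 March: ", at_first_alert.value)
print("context on 12 March:", at_second_alert.value)
```

The same login from Tokyo gets different context on the two dates, and an analyst reviewing the earlier verdict can still see what was known at the time.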

A second, operationally real example is addressing the loss of human context in Infrastructure as Code (IaC) deployments. A log alert fires because a sensitive cloud resource, such as a database security group, was modified. The low-level cloud logs will only show the change was made by the CI/CD service account (e.g., the identity used by Terraform). This is the classic identity impersonation problem that cripples SOC investigations. Our Graph instantly reconstructs, pulling in context from systems like Jira, Git, or Slack, connecting the service account action to the human Reason behind it: the Git commit hash, the JIRA ticket ID, or the engineer's comment approving the change. The agent immediately transforms an opaque machine log into a clear verdict: "Change was initiated by Engineer Jane Doe via approved JIRA ticket-456." This capability allows the agent to distinguish a routine, approved IaC change from a truly elevated-risk scenario, all without a human analyst manually chasing a service account ID across multiple siloed systems.
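The chain from service account to human can be sketched as a series of lookups. The Python below is an illustration under stated assumptions. It assumes the CI system records which commit each pipeline run deployed and during which time window, and that each commit references a ticket. The dictionaries, field names, the service account name `svc-terraform`, and the commit hash `abc1234` are made up for the example. They are not real API responses from Git, Jira, or any cloud provider.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Attribution:
    engineer: str
    commit: str
    ticket: str
    approved: bool


def attribute(event: dict, runs: list[dict], commits: dict, tickets: dict) -> Attribution | None:
    """Walk log event -> pipeline run -> commit -> ticket; None if any link is missing."""
    run = next(
        (r for r in runs
         if r["service_account"] == event["actor"]
         and r["start"] <= event["time"] <= r["end"]),
        None,
    )
    if run is None:
        return None
    commit = commits.get(run["commit"])
    ticket = tickets.get(commit["ticket"]) if commit else None
    if commit is None or ticket is None:
        return None
    return Attribution(
        engineer=commit["author"],
        commit=run["commit"],
        ticket=commit["ticket"],
        approved=ticket["status"] == "approved",
    )


# Hypothetical data; times are epoch seconds.
event = {"actor": "svc-terraform", "resource": "db-security-group", "time": 1_000_120}
runs = [{"service_account": "svc-terraform", "commit": "abc1234",
         "start": 1_000_000, "end": 1_000_300}]
commits = {"abc1234": {"author": "Jane Doe", "ticket": "ticket-456"}}
tickets = {"ticket-456": {"status": "approved"}}

result = attribute(event, runs, commits, tickets)
print(result)
```

When any link in the chain is missing, the function returns `None`. In this sketch that is the case that deserves escalation, because the change cannot be tied back to an approved human decision.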

Operational Impact

The business outcomes of using a data-first approach are massive and the market feedback is very positive so far. What we are hearing from CISOs and SOC managers is:

- Significantly improved precision of verdicts: AI agent reasoning improves dramatically. Agents can answer more complex questions and make judgments based on the most delicate nuances of the current situation, for example: “this unusual port activity is related to the new integration going live this week so it is likely legit”.

- Consistency: by mapping context and achieving very high granularity, the Graph enables agents to pinpoint the most relevant scenario to the alert, and investigate consistently rather than “reinventing the wheel” for the same incident.

- Transparency & Root Cause Analysis: analysts can drill down through layers of historical decisions to understand agent reasoning. Explanations and recommendations are provided in easy-to-understand language.

- Adaptability: agents are resilient to frequent changes in policies, ownerships and best practices as the Graph is continuously constructed and adjusted to the most recent organizational context. For example: an agent would be aware of a security posture change due to recent threats, meaning that an alert would no longer be considered benign.

- Institutional Knowledge Retention: the Security Context Graph captures the organization's memory - the nuances and expertise of the most experienced analysts, retains it, uses it for future investigations, and can even “onboard” new analysts. Memory is no longer lost when analysts leave.

What’s Next?

The Security Context Graph is powering Mate’s agents at Fortune 500 SOCs and evolving every day. We are very excited by the results so far. Clearly, this approach has massive potential beyond the SOC and across multiple cyber security categories. Today it is enabling agents to rebuild trust, just like we imagined when we founded Mate Security.
